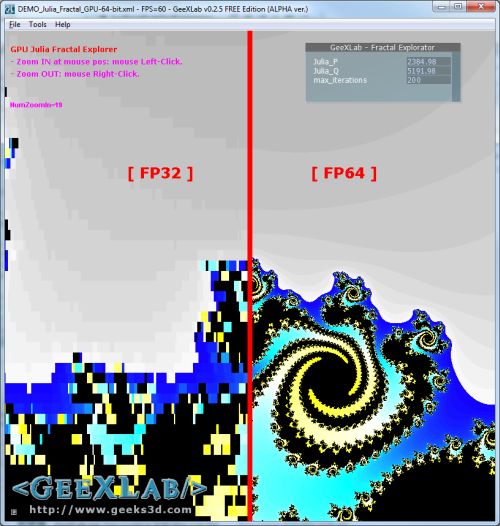

Double precision (FP64) is generally used in computer science, and the incomplete Cholesky preconditioned conjugate gradient (ICCG) method, which is widely used in computer simulations, is a typical example. Recently, the use of single precision (FP32) in the ICCG method for well-conditioned problems has been discussed as a way to reduce computation time and power consumption. The use of half precision (FP16) is also being examined in the field of machine learning, and hardware support for FP16 on GPUs and some CPUs is advancing. Using FP16 can further reduce the amount of memory transfer compared with FP32, so large gains can be expected if it can be used with the ICCG method. When FP16 is applied to the ICCG method, however, ill-conditioned problems are difficult to solve because of the limited range of the FP16 exponent. Therefore, in this study, we evaluate the usefulness of FP16 in the ICCG method under several problem conditions, and we also propose and evaluate CPU implementations of FP21 and FP42 adaptive precision, whose expressiveness is higher than that of FP16. Additionally, to reduce the calculation time with low precision, it is necessary to maintain high memory bandwidth utilization through effective SIMDization.
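As a rough illustration of the idea in the abstract above, the following sketch stores an incomplete Cholesky factor in FP64, FP32, or FP16 and uses it to precondition a conjugate gradient solve on a small 2D Poisson matrix. It is not the authors' implementation. The test matrix, the dense IC(0) routine, and the helper names `poisson_2d`, `incomplete_cholesky`, and `pcg` are assumptions made for this example. Only the preconditioner is stored in reduced precision, all arithmetic is promoted to FP64, and FP21/FP42 are not modelled because NumPy has no such types.

```python
import numpy as np


def poisson_2d(m):
    """Assumed test problem: 5-point Laplacian on an m x m grid (SPD, n = m*m)."""
    T = 2.0 * np.eye(m) - np.eye(m, k=1) - np.eye(m, k=-1)
    I = np.eye(m)
    return np.kron(I, T) + np.kron(T, I)


def incomplete_cholesky(A):
    """IC(0): Cholesky restricted to the nonzero pattern of tril(A).

    Dense storage is used only to keep the sketch short.
    """
    n = A.shape[0]
    pattern = np.tril(A) != 0.0
    L = np.tril(A).astype(np.float64)
    for k in range(n):
        L[k, k] = np.sqrt(L[k, k])
        # Rows below the diagonal that are structurally nonzero in column k.
        rows = [i for i in range(k + 1, n) if pattern[i, k]]
        for i in rows:
            L[i, k] /= L[k, k]
        # Update the trailing submatrix, dropping any fill-in outside the pattern.
        for j in rows:
            for i in rows:
                if i >= j and pattern[i, j]:
                    L[i, j] -= L[i, k] * L[j, k]
    return L


def pcg(A, b, L_stored, tol=1e-8, max_iter=500):
    """Preconditioned CG with M = L L^T, where L may be stored in low precision."""
    # Assumption: the factor is stored in low precision but promoted for arithmetic.
    L = L_stored.astype(np.float64)

    def apply_preconditioner(r):
        y = np.linalg.solve(L, r)       # forward solve  L y = r
        return np.linalg.solve(L.T, y)  # backward solve L^T z = y

    x = np.zeros_like(b)
    r = b - A @ x
    z = apply_preconditioner(r)
    p = z.copy()
    rz = r @ z
    b_norm = np.linalg.norm(b)
    for iteration in range(1, max_iter + 1):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) <= tol * b_norm:
            return x, iteration
        z = apply_preconditioner(r)
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, max_iter


if __name__ == "__main__":
    A = poisson_2d(8)
    b = np.ones(A.shape[0])
    L = incomplete_cholesky(A)
    for dtype in (np.float64, np.float32, np.float16):
        x, iterations = pcg(A, b, L.astype(dtype))
        residual = np.linalg.norm(b - A @ x)
        print(dtype.__name__, "iterations:", iterations, "residual:", residual)
```

On this well-scaled matrix the entries of the factor sit comfortably inside the FP16 range, so the FP16 run is expected to converge. Matrices whose entries span many orders of magnitude can overflow or underflow when the factor is cast to FP16, which is the difficulty the abstract attributes to the FP16 exponent.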

Floating-point data types include double-precision (FP64), single-precision (FP32), and half-precision (FP16). When a neural network model is trained, FP32 is generally used by default to represent the model weights and other parameters. The following is a brief introduction to floating-point data types.

According to IEEE 754, floating-point data types are classified into double-precision (FP64), single-precision (FP32), and half-precision (FP16). FP64 uses 8 bytes (64 bits in total) for encoding and storage, FP32 uses 4 bytes (32 bits in total), and FP16 uses 2 bytes (16 bits in total). As shown in the figure, the storage space of FP16 is half that of FP32, and the storage space of FP32 is half that of FP64. Each type is divided into three bit fields: a sign bit, exponent bits, and, in the rightmost positions, fraction bits.

Take FP16 as an example. The first bit indicates the sign (positive or negative), the next five bits indicate the exponent, and the last 10 bits indicate the fraction.

Why do we need mixed-precision? Compared with FP32, FP16 has the following advantages:

- Reduced memory usage: The bit width of FP16 is half that of FP32, so the memory occupied by parameters such as the weights is also halved. The saved memory can be used to store larger network models or to train with more data.
- Higher communication efficiency: For distributed training, especially large-scale model training, communication overhead restricts overall performance. A smaller communication bit width improves communication performance, reduces waiting time, and accelerates data flow.
- Higher computing efficiency: On special AI acceleration chips, such as the Huawei Ascend 910 and 310 series, or GPUs of the NVIDIA Volta architecture, FP16 computation is faster than FP32.

However, using FP16 also brings some problems, the most important of which are precision overflow and rounding error.

Data overflow: The valid data range of FP16 is \([6.10\times10^{-5}, 65504]\).

If an FP16 operator is given FP32 data, the MindSpore framework performs precision reduction at the backend. You can enable INFO logging and search for the keyword "Reduce precision" to see which operators had their precision reduced. The accompanying example, which survives only as a fragment, sets `amp_level = "O2"` on the model.
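The FP16 layout and its limits described above can be checked with a short sketch. It decodes a 16-bit pattern into the 1-bit sign, 5-bit exponent, and 10-bit fraction fields following IEEE 754 binary16, then shows overflow, underflow, and rounding with NumPy's `float16`. The helper names `decode_fp16` and `to_fp16` are made up for this example and are not part of NumPy or MindSpore.

```python
import numpy as np


def decode_fp16(bits):
    """Split a 16-bit pattern into (sign, exponent, fraction, value)."""
    sign = (bits >> 15) & 0x1        # 1 bit
    exponent = (bits >> 10) & 0x1F   # 5 bits, bias 15
    fraction = bits & 0x3FF          # 10 bits
    scale = -1.0 if sign else 1.0
    if exponent == 0x1F:             # all ones: infinity or NaN
        value = float("nan") if fraction else scale * float("inf")
    elif exponent == 0:              # zero or subnormal: no implicit leading 1
        value = scale * 2.0 ** -14 * (fraction / 1024.0)
    else:                            # normal number: implicit leading 1
        value = scale * 2.0 ** (exponent - 15) * (1.0 + fraction / 1024.0)
    return sign, exponent, fraction, value


def to_fp16(x):
    """Cast a Python float to FP16, silencing NumPy's overflow/underflow warnings."""
    with np.errstate(over="ignore", under="ignore"):
        return np.float32(x).astype(np.float16)


if __name__ == "__main__":
    # 0x3C00 is 1.0, 0x0400 is the smallest normal value, 0x7BFF is the largest finite value.
    for bits in (0x3C00, 0x0400, 0x7BFF):
        reference = np.array([bits], dtype=np.uint16).view(np.float16)[0]
        print(hex(bits), decode_fp16(bits), float(reference))

    print(to_fp16(70000.0))                # above 65504, so the cast overflows to inf
    print(to_fp16(1e-8))                   # below the smallest subnormal, so it becomes 0
    print(to_fp16(2048.0) + to_fp16(1.0))  # 2049 is not representable; the sum stays 2048
```

The smallest normal value, 2^-14, is about 6.10e-5, and the largest finite value is 65504, which matches the range quoted above.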
